Optimizing Training Data Allocation Between Supervised and Preference Finetuning in Large Language Models

Source: MarkTechPost
Large Language Models (LLMs) face significant challenges in optimizing their post-training methods, particularly in balancing Supervised...

This AI Paper from Weco AI Introduces AIDE: A Tree-Search-Based AI Agent for Automating Machine Learning Engineering

Source: MarkTechPost
The development of high-performing machine learning models remains a time-consuming and resource-intensive process. Engineers and researchers...

How AI is Transforming Journalism: The New York Times’ Approach with Echo

Source: Unite.AI
Artificial Intelligence (AI) is changing how news is researched, written, and delivered. A 2023 report by...

Google’s AI Co-Scientist vs. OpenAI’s Deep Research vs. Perplexity’s Deep Research: A Comparison of AI Research Agents

Source: Unite.AI
Rapid advancements in AI have brought about the emergence of AI research agents, tools designed to assist...

What are AI Agents? Demystifying Autonomous Software with a Human Touch

Source: MarkTechPost
In today’s digital landscape, technology continues to advance at a steady pace. One development that has...

Moonshot AI and UCLA Researchers Release Moonlight: A 3B/16B-Parameter Mixture-of-Experts (MoE) Model Trained with 5.7T Tokens Using the Muon Optimizer

Source: MarkTechPost
Training large language models (LLMs) has become central to advancing artificial intelligence, yet it is not...

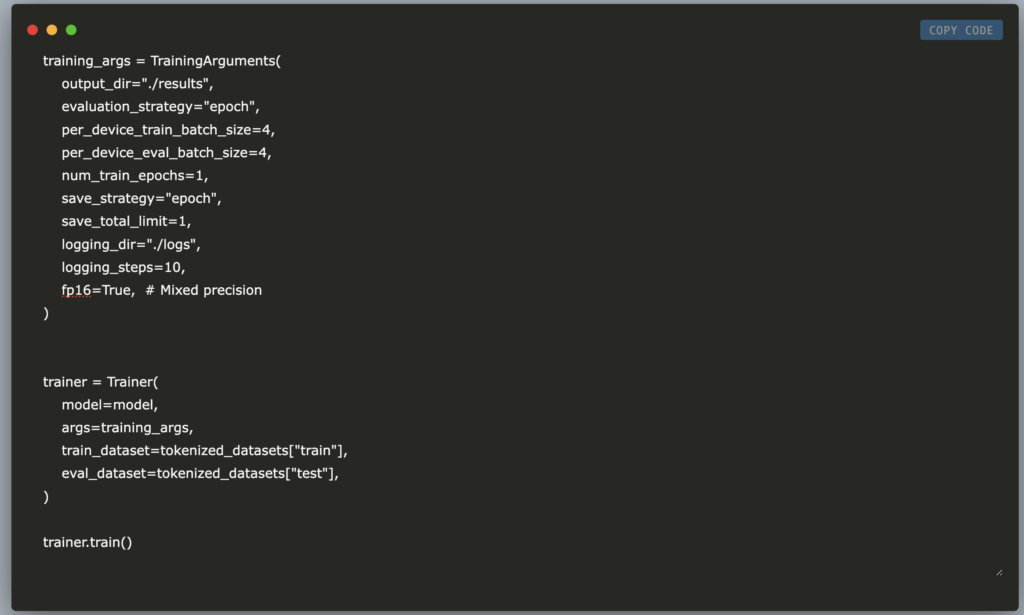

Fine-Tuning NVIDIA NV-Embed-v1 on Amazon Polarity Dataset Using LoRA and PEFT: A Memory-Efficient Approach with Transformers and Hugging Face

Source: MarkTechPost
In this tutorial, we explore how to fine-tune NVIDIA’s NV-Embed-v1 model on the Amazon Polarity dataset...

Sony Researchers Propose TalkHier: A Novel AI Framework for LLM-MA Systems that Addresses Key Challenges in Communication and Refinement

Source: MarkTechPost
LLM-based multi-agent (LLM-MA) systems enable multiple language model agents to collaborate on complex tasks by dividing...

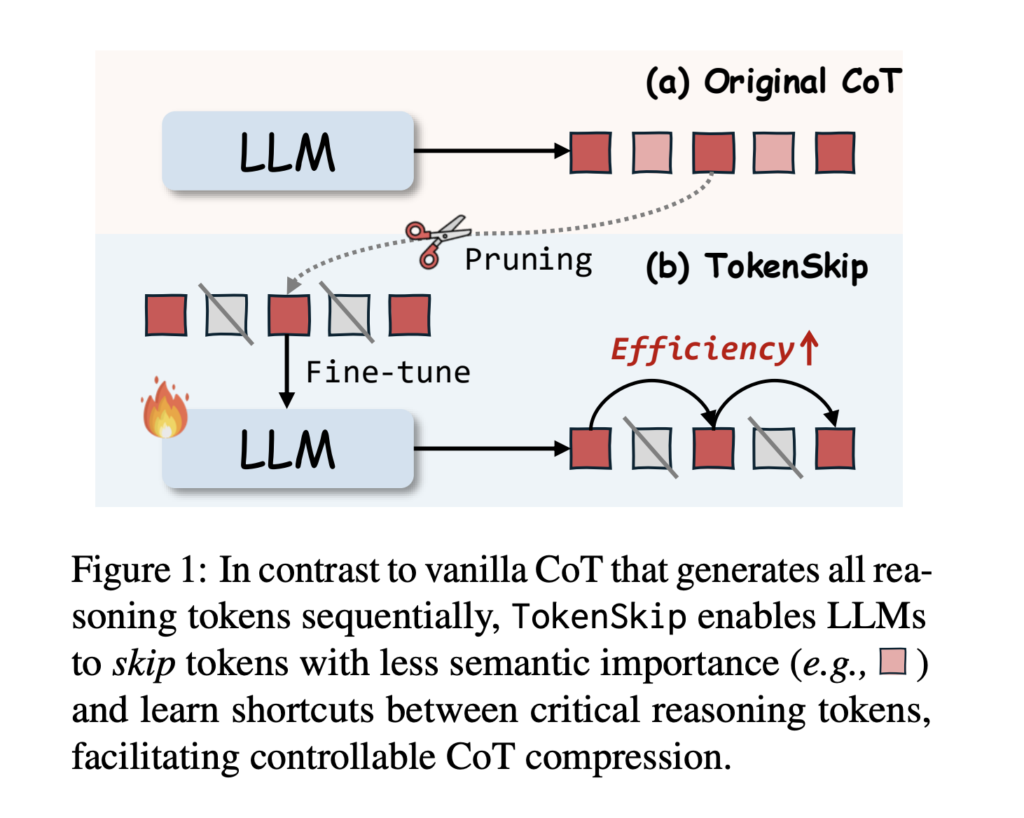

TokenSkip: Optimizing Chain-of-Thought Reasoning in LLMs Through Controllable Token Compression

Source: MarkTechPost
Large Language Models (LLMs) face significant challenges in complex reasoning tasks, despite the breakthrough advances achieved...