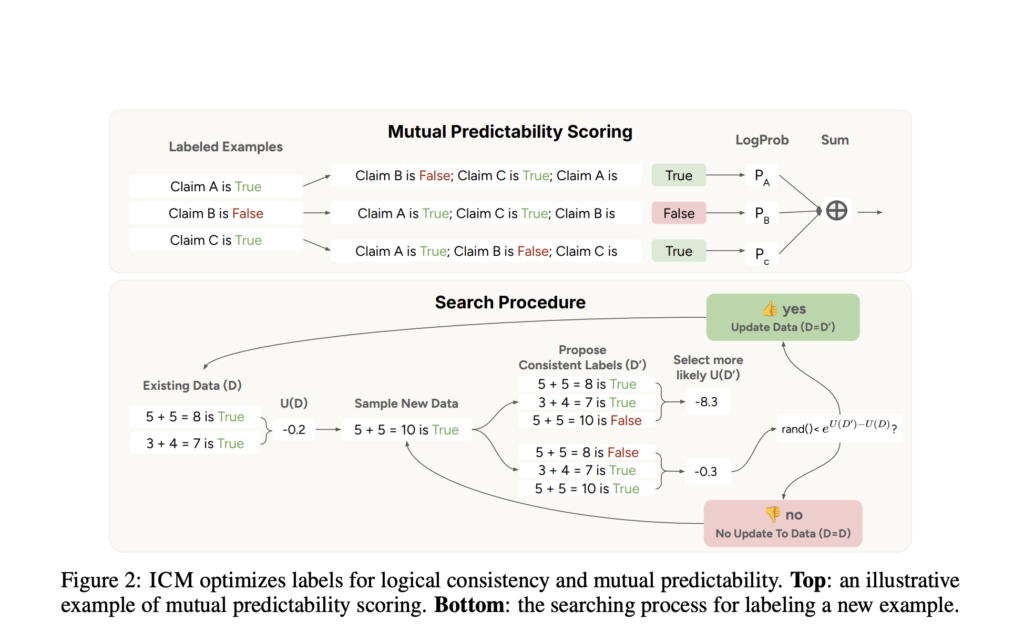

Internal Coherence Maximization (ICM): A Label-Free, Unsupervised Training Framework for LLMs

Source: MarkTechPost. Post-training methods for pre-trained language models (LMs) depend on human supervision through demonstrations or preference feedback...

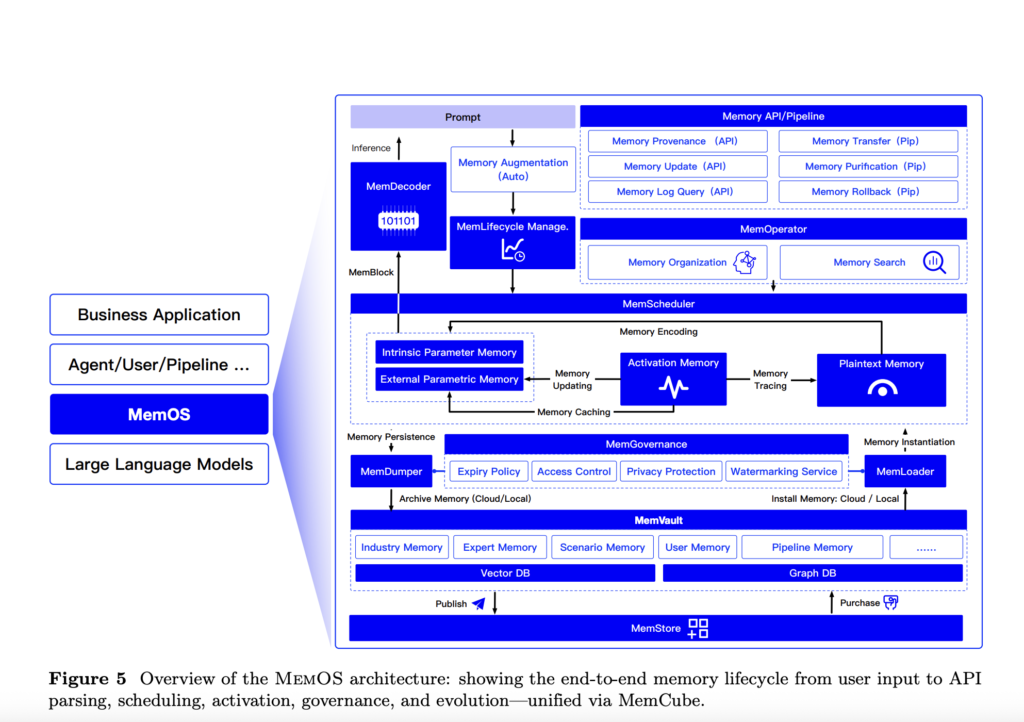

MemOS: A Memory-Centric Operating System for Evolving and Adaptive Large Language Models

Source: MarkTechPost. LLMs are increasingly seen as key to achieving Artificial General Intelligence (AGI), but they face major...

Sakana AI Introduces Text-to-LoRA (T2L): A Hypernetwork that Generates Task-Specific LLM Adapters (LoRAs) based on a Text Description of the Task

Source: MarkTechPost. Transformer models have significantly influenced how AI systems approach tasks in natural language understanding, translation, and...

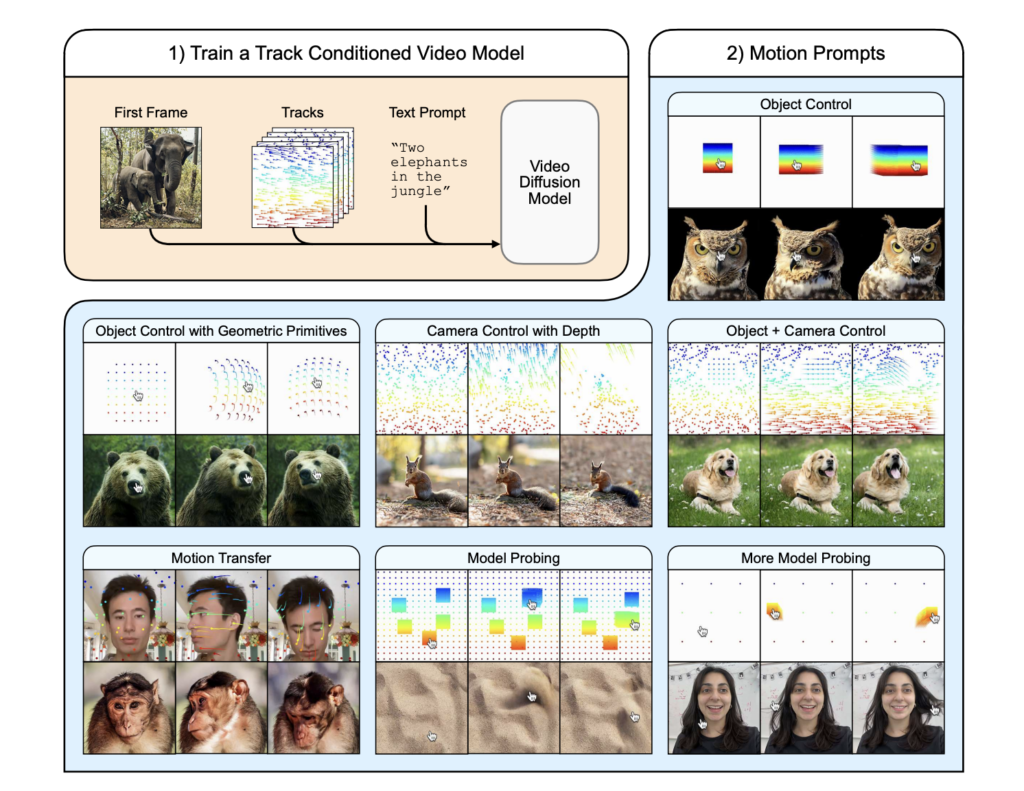

Highlighted at CVPR 2025: Google DeepMind’s ‘Motion Prompting’ Paper Unlocks Granular Video Control

Source: MarkTechPost. Key Takeaways: Researchers from Google DeepMind, the University of Michigan & Brown University have developed “Motion...

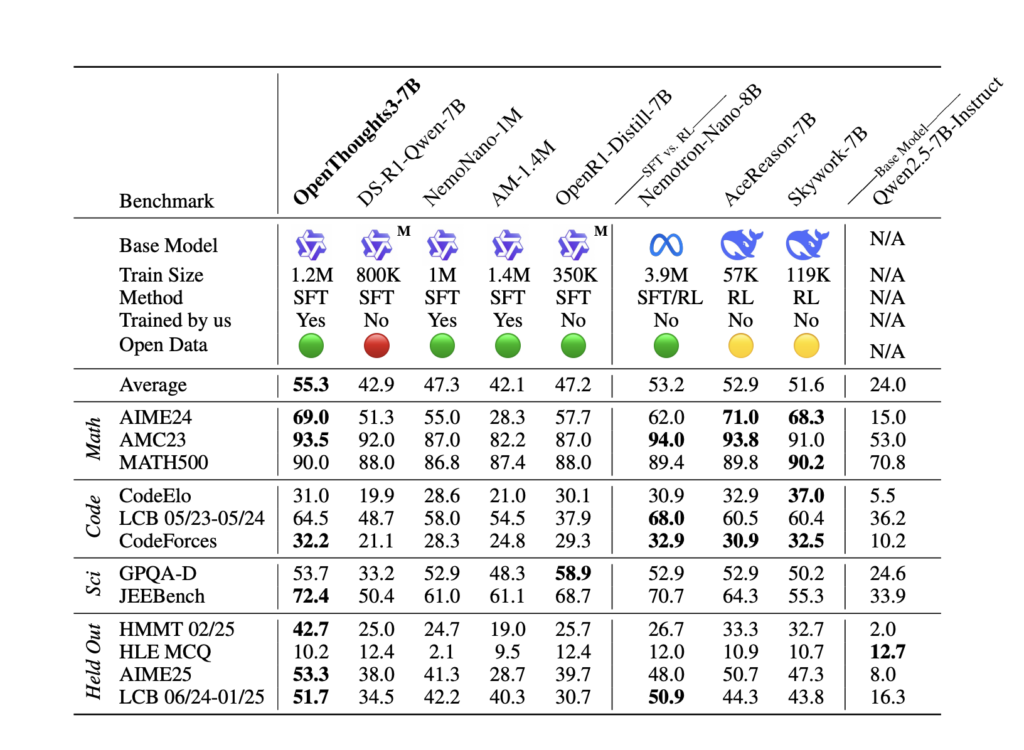

OpenThoughts: A Scalable Supervised Fine-Tuning (SFT) Data Curation Pipeline for Reasoning Models

Source: MarkTechPost. The Growing Complexity of Reasoning Data Curation: Recent reasoning models, such as DeepSeek-R1 and o3, have...