NVIDIA AI Releases UltraLong-8B: A Series of Ultra-Long Context Language Models Designed to Process Extensive Sequences of Text (up to 1M, 2M, and 4M tokens)

Source: MarkTechPost Large language models (LLMs) have shown remarkable performance across diverse text and multimodal tasks. However, many...

LightPROF: A Lightweight AI Framework that Enables Small-Scale Language Models to Perform Complex Reasoning Over Knowledge Graphs (KGs) Using Structured Prompts

Source: MarkTechPost Large Language Models (LLMs) have revolutionized natural language processing, with abilities on complex zero-shot tasks through...

Google AI Introduces the Articulate Medical Intelligence Explorer (AMIE): A Large Language Model Optimized for Diagnostic Reasoning, and Evaluates its Ability to Generate a Differential Diagnosis

Source: MarkTechPost Developing an accurate differential diagnosis (DDx) is a fundamental part of medical care, typically achieved through...

Step by Step Coding Guide to Build a Neural Collaborative Filtering (NCF) Recommendation System with PyTorch

Source: MarkTechPost This tutorial will walk you through using PyTorch to implement a Neural Collaborative Filtering (NCF) recommendation...

Moonshot AI Released Kimi-VL: A Compact and Powerful Vision-Language Model Series Redefining Multimodal Reasoning, Long-Context Understanding, and High-Resolution Visual Processing

Source: MarkTechPost Multimodal AI enables machines to process and reason across various input formats, such as images, text,...

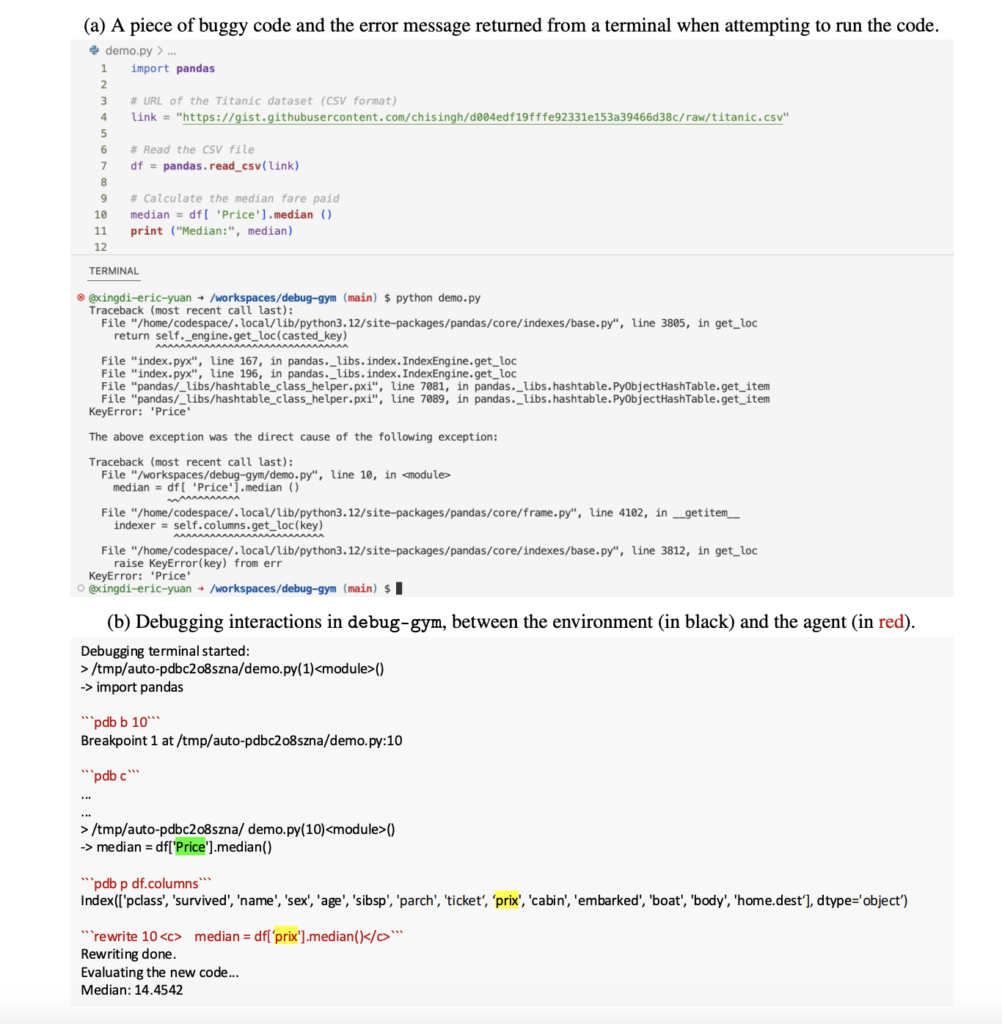

Can LLMs Debug Like Humans? Microsoft Introduces Debug-Gym for AI Coding Agents

Source: MarkTechPost The Debugging Problem in AI Coding Tools Despite significant progress in code generation and completion, AI...

This AI Paper from Salesforce Introduces VLM2VEC and MMEB: A Contrastive Framework and Benchmark for Universal Multimodal Embeddings

Source: MarkTechPost Multimodal embeddings combine visual and textual data into a single representational space, enabling systems to understand...

LLMs No Longer Require Powerful Servers: Researchers from MIT, KAUST, ISTA, and Yandex Introduce a New AI Approach to Rapidly Compress Large Language Models without a Significant Loss of Quality

Source: MarkTechPost HIGGS, an innovative method for compressing large language models, was developed in collaboration with teams...

Nvidia Released Llama-3.1-Nemotron-Ultra-253B-v1: A State-of-the-Art AI Model Balancing Massive Scale, Reasoning Power, and Efficient Deployment for Enterprise Innovation

Source: MarkTechPost As AI adoption increases in digital infrastructure, enterprises and developers face mounting pressure to balance computational...

Balancing Accuracy and Efficiency in Language Models: A Two-Phase RL Post-Training Approach for Concise Reasoning

Source: MarkTechPost Recent advancements in LLMs have significantly enhanced their reasoning capabilities, particularly through RL-based fine-tuning. Initially trained...