The philosophical puzzle of rational artificial intelligence

Source: MIT News – Artificial intelligence To what extent can an artificial system be rational? A new MIT...

A Coding Implementation to Training, Optimizing, Evaluating, and Interpreting Knowledge Graph Embeddings with PyKEEN

Source: MarkTechPost In this tutorial, we walk through an end-to-end, advanced workflow for knowledge graph embeddings using PyKEEN,...

Microsoft Unveils Maia 200, An FP4 and FP8 Optimized AI Inference Accelerator for Azure Datacenters

Source: MarkTechPost Maia 200 is Microsoft’s new in-house AI accelerator designed for inference in Azure datacenters. It...

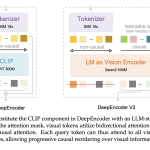

DeepSeek AI Releases DeepSeek-OCR 2 with Causal Visual Flow Encoder for Layout Aware Document Understanding

Source: MarkTechPost DeepSeek AI released DeepSeek-OCR 2, an open-source document OCR and understanding system that restructures its...

A Coding Deep Dive into Differentiable Computer Vision with Kornia Using Geometry Optimization, LoFTR Matching, and GPU Augmentations

Source: MarkTechPost We implement an advanced, end-to-end Kornia tutorial and demonstrate how modern, differentiable computer vision can be...

Ant Group Releases LingBot-VLA, A Vision Language Action Foundation Model For Real World Robot Manipulation

Source: MarkTechPost How do you build a single vision language action model that can control many different dual...

Beyond the Chatbox: Generative UI, AG-UI, and the Stack Behind Agent-Driven Interfaces

Source: MarkTechPost Most AI applications still showcase the model as a chat box. That interface is simple, but...

Google DeepMind Unveils AlphaGenome: A Unified Sequence-to-Function Model Using Hybrid Transformers and U-Nets to Decode the Human Genome

Source: MarkTechPost Google DeepMind is expanding its biological toolkit beyond the world of protein folding. After the success...

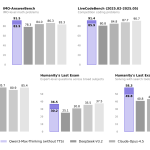

Alibaba Introduces Qwen3-Max-Thinking, a Test Time Scaled Reasoning Model with Native Tool Use Powering Agentic Workloads

Source: MarkTechPost Qwen3-Max-Thinking is Alibaba’s new flagship reasoning model. It not only scales parameters but also changes...

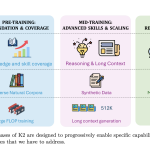

MBZUAI Releases K2 Think V2: A Fully Sovereign 70B Reasoning Model For Math, Code, And Science

Source: MarkTechPost Can a fully sovereign open reasoning model match state-of-the-art systems when every part...