MiniMax AI Releases MiniMax-M1: A 456B Parameter Hybrid Model for Long-Context and Reinforcement Learning (RL) Tasks

Source: MarkTechPost. The Challenge of Long-Context Reasoning in AI Models. Large reasoning models are not only designed to...

ReVisual-R1: An Open-Source 7B Multimodal Large Language Model (MLLM) that Achieves Long, Accurate and Thoughtful Reasoning

Source: MarkTechPost. The Challenge of Multimodal Reasoning. Recent breakthroughs in text-based language models, such as DeepSeek-R1, have demonstrated...

HtFLlib: A Unified Benchmarking Library for Evaluating Heterogeneous Federated Learning Methods Across Modalities

Source: MarkTechPost. AI institutions develop heterogeneous models for specific tasks but face data scarcity challenges during training. Traditional...

Why Small Language Models (SLMs) Are Poised to Redefine Agentic AI: Efficiency, Cost, and Practical Deployment

Source: MarkTechPost. The Shift in Agentic AI System Needs. LLMs are widely admired for their human-like capabilities and...

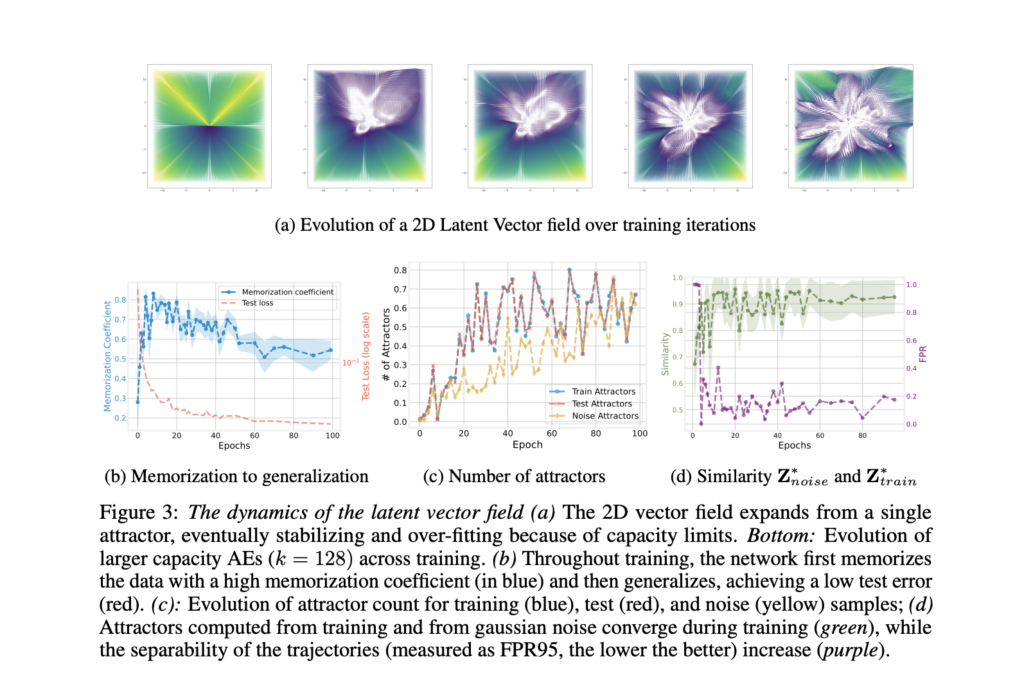

How Latent Vector Fields Reveal the Inner Workings of Neural Autoencoders

Source: MarkTechPost. Autoencoders and the Latent Space. Neural networks are designed to learn compressed representations of high-dimensional data,...

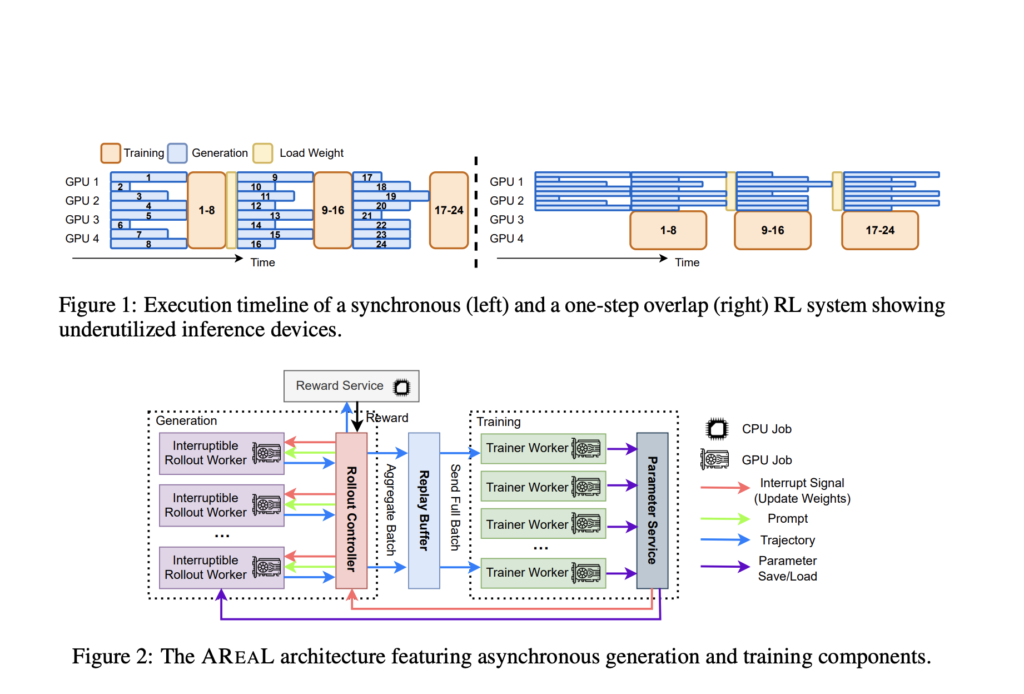

AREAL: Accelerating Large Reasoning Model Training with Fully Asynchronous Reinforcement Learning

Source: MarkTechPost. Introduction: The Need for Efficient RL in LRMs. Reinforcement Learning (RL) is increasingly used to enhance...

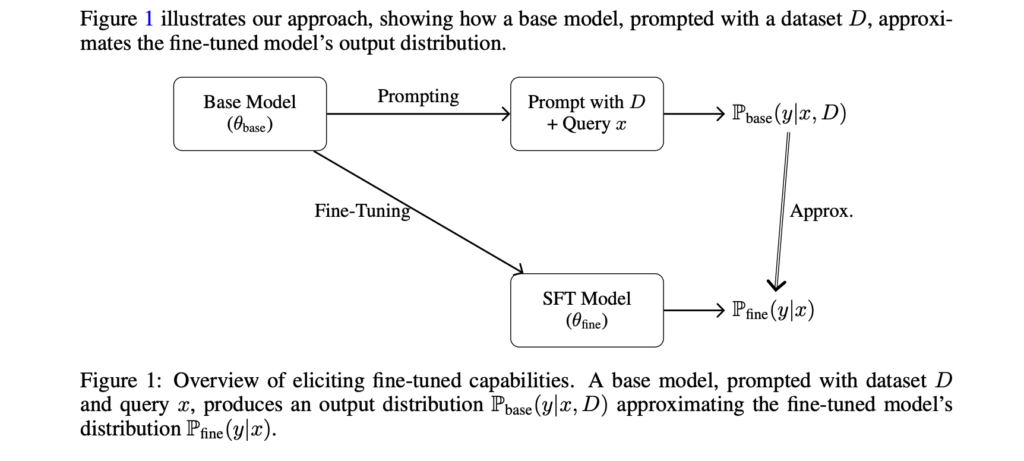

From Fine-Tuning to Prompt Engineering: Theory and Practice for Efficient Transformer Adaptation

Source: MarkTechPost. The Challenge of Fine-Tuning Large Transformer Models. Self-attention enables transformer models to capture long-range dependencies in...

Combining technology, education, and human connection to improve online learning

Source: MIT News – Artificial intelligence. MIT Morningside Academy for Design (MAD) Fellow Caitlin Morris is an architect, artist, researcher,...

A sounding board for strengthening the student experience

Source: MIT News – Artificial intelligence. During his first year at MIT in 2021, Matthew Caren ’25 received...

Unpacking the bias of large language models

Source: MIT News – Artificial intelligence. Research has shown that large language models (LLMs) tend to overemphasize information...