Researchers from UCLA, UC Merced and Adobe propose METAL: A Multi-Agent Framework that Divides the Task of Chart Generation into the Iterative Collaboration among Specialized Agents

Source: MarkTechPost. Creating charts that accurately reflect complex data remains a nuanced challenge in today’s data visualization landscape....

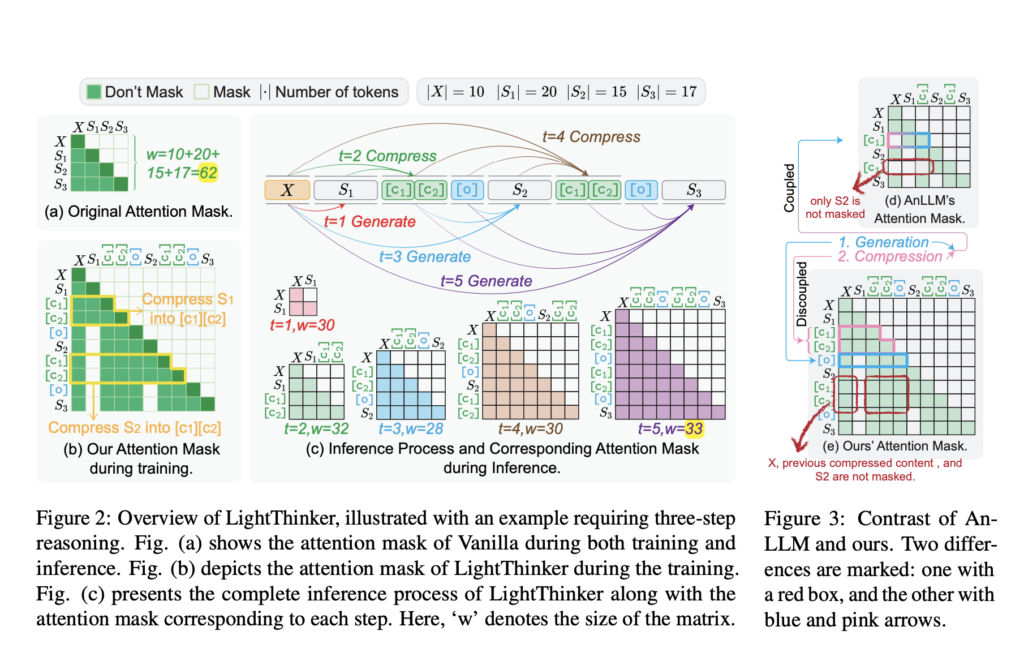

LightThinker: Dynamic Compression of Intermediate Thoughts for More Efficient LLM Reasoning

Source: MarkTechPost. Methods like Chain-of-Thought (CoT) prompting have enhanced reasoning by breaking complex problems into sequential sub-steps. More...

Self-Rewarding Reasoning in LLMs: Enhancing Autonomous Error Detection and Correction for Mathematical Reasoning

Source: MarkTechPost. LLMs have demonstrated strong reasoning capabilities in domains such as mathematics and coding, with models like...

Stanford Researchers Uncover Prompt Caching Risks in AI APIs: Revealing Security Flaws and Data Vulnerabilities

Source: MarkTechPost. The processing requirements of LLMs pose considerable challenges, particularly for real-time uses where fast response time...

A-MEM: A Novel Agentic Memory System for LLM Agents that Enables Dynamic Memory Structuring without Relying on Static, Predetermined Memory Operations

Source: MarkTechPost. Current memory systems for large language model (LLM) agents often struggle with rigidity and a lack...

Microsoft AI Released LongRoPE2: A Near-Lossless Method to Extend Large Language Model Context Windows to 128K Tokens While Retaining Over 97% Short-Context Accuracy

Source: MarkTechPost. Large Language Models (LLMs) have advanced significantly, but a key limitation remains their inability to process...

Tencent AI Lab Introduces Unsupervised Prefix Fine-Tuning (UPFT): An Efficient Method that Trains Models on only the First 8-32 Tokens of Single Self-Generated Solutions

Source: MarkTechPost. Unleashing a more efficient approach to fine-tuning reasoning in large language models, recent work by researchers...

IBM AI Releases Granite 3.2 8B Instruct and Granite 3.2 2B Instruct Models: Offering Experimental Chain-of-Thought Reasoning Capabilities

Source: MarkTechPost. Large language models (LLMs) leverage deep learning techniques to understand and generate human-like text, making them...

Google AI Introduces PlanGEN: A Multi-Agent AI Framework Designed to Enhance Planning and Reasoning in LLMs through Constraint-Guided Iterative Verification and Adaptive Algorithm Selection

Source: MarkTechPost. Large language models have made remarkable strides in natural language processing, yet they still encounter difficulties...

Thinking Harder, Not Longer: Evaluating Reasoning Efficiency in Advanced Language Models

Source: MarkTechPost. Large language models (LLMs) have progressed beyond basic natural language processing to tackle complex problem-solving tasks....