OpenAI Releases a Research Preview of GPT-5.3-Codex-Spark: A 15x Faster AI Coding Model Delivering Over 1000 Tokens Per Second on Cerebras Hardware

Source: MarkTechPost
OpenAI just launched a new research preview called GPT-5.3-Codex-Spark. This model is built for 1...

Is This AGI? Google’s Gemini 3 Deep Think Shatters Humanity’s Last Exam And Hits 84.6% On ARC-AGI-2 Performance Today

Source: MarkTechPost
Google announced a major update to Gemini 3 Deep Think today. This update is specifically built...

NVIDIA Researchers Introduce KVTC Transform Coding Pipeline to Compress Key-Value Caches by 20x for Efficient LLM Serving

Source: MarkTechPost
Serving Large Language Models (LLMs) at scale is a massive engineering challenge because of Key-Value (KV)...

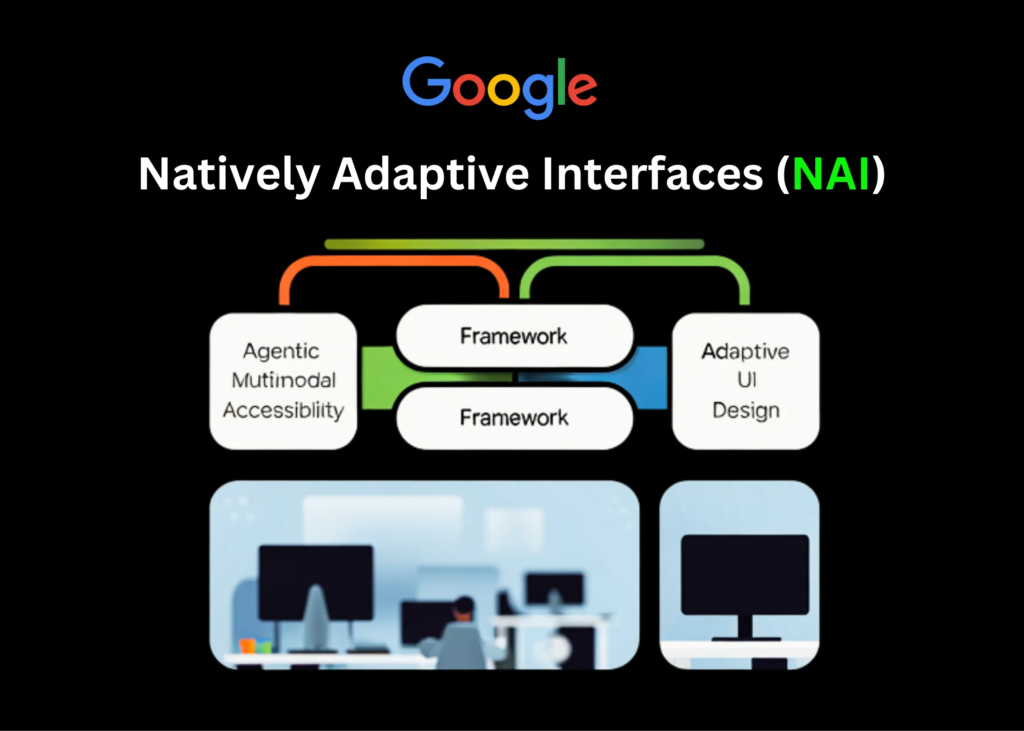

Google AI Introduces Natively Adaptive Interfaces (NAI): An Agentic Multimodal Accessibility Framework Built on Gemini for Adaptive UI Design

Source: MarkTechPost
Google Research is proposing a new way to build accessible software with Natively Adaptive Interfaces (NAI),...

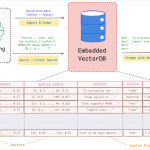

Alibaba Open-Sources Zvec: An Embedded Vector Database Bringing SQLite-like Simplicity and High-Performance On-Device RAG to Edge Applications

Source: MarkTechPost
Alibaba's Tongyi Lab research team has released ‘Zvec’, an open-source, in-process vector database that targets edge...

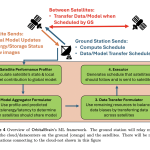

Microsoft AI Proposes OrbitalBrain: Enabling Distributed Machine Learning in Space with Inter-Satellite Links and Constellation-Aware Resource Optimization Strategies

Source: MarkTechPost
Earth observation (EO) constellations capture huge volumes of high-resolution imagery every day, but most of it...

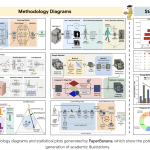

Google AI Introduces PaperBanana: An Agentic Framework that Automates Publication-Ready Methodology Diagrams and Statistical Plots

Source: MarkTechPost
Generating publication-ready illustrations is a labor-intensive bottleneck in the research workflow. While AI scientists can now...

NVIDIA AI releases C-RADIOv4 vision backbone unifying SigLIP2, DINOv3, and SAM3 for classification, dense prediction, and segmentation workloads at scale

Source: MarkTechPost
How do you combine SigLIP2, DINOv3, and SAM3 into a single vision backbone without sacrificing dense...

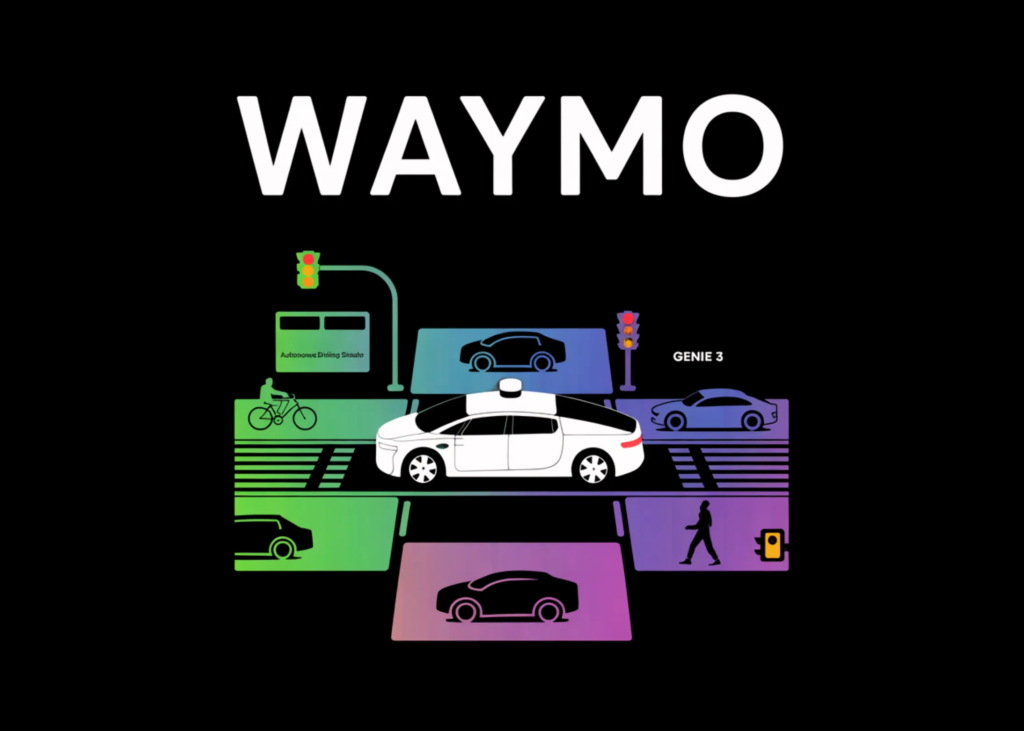

Waymo Introduces the Waymo World Model: A New Frontier Simulator Model for Autonomous Driving, Built on Top of Genie 3

Source: MarkTechPost
Waymo is introducing the Waymo World Model, a frontier generative model that drives its next generation...

Anthropic Releases Claude Opus 4.6 With 1M Context, Agentic Coding, Adaptive Reasoning Controls, and Expanded Safety Tooling Capabilities

Source: MarkTechPost
Anthropic has launched Claude Opus 4.6, its most capable model to date, focused on long-context reasoning,...