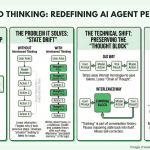

MiniMax-M2: Technical Deep Dive into Interleaved Thinking for Agentic Coding Workflows

Source: MarkTechPost. The AI coding landscape just got a massive shake-up. If you’ve been relying on Claude 3.5...

Meta AI Researchers Introduce Matrix: A Ray-Native Decentralized Framework for Multi Agent Synthetic Data Generation

Source: MarkTechPost. How do you keep synthetic data fresh and diverse for modern AI models without turning a...

NVIDIA AI Releases Orchestrator-8B: A Reinforcement Learning Trained Controller for Efficient Tool and Model Selection

Source: MarkTechPost. How can an AI system learn to pick the right model or tool for each step...

Tencent Hunyuan Releases HunyuanOCR: a 1B Parameter End to End OCR Expert VLM

Source: MarkTechPost. Tencent Hunyuan has released HunyuanOCR, a 1B parameter vision language model that is specialized for OCR...

NVIDIA AI Releases Nemotron-Elastic-12B: A Single AI Model that Gives You 6B/9B/12B Variants without Extra Training Cost

Source: MarkTechPost. Why are AI dev teams still training and storing multiple large language models for different deployment...

Google DeepMind Introduces Nano Banana Pro: the Gemini 3 Pro Image Model for Text Accurate and Studio Grade Visuals

Source: MarkTechPost. Nano Banana Pro, also called Gemini 3 Pro Image, is Google DeepMind’s new image generation and...

Allen Institute for AI (AI2) Introduces Olmo 3: An Open Source 7B and 32B LLM Family Built on the Dolma 3 and Dolci Stack

Source: MarkTechPost. Allen Institute for AI (AI2) is releasing Olmo 3 as a fully open model family that...

Meta AI Releases Segment Anything Model 3 (SAM 3) for Promptable Concept Segmentation in Images and Videos

Source: MarkTechPost. How do you reliably find, segment and track every instance of any concept across large image...

Meet Kosmos: An AI Scientist that Automates Data-Driven Discovery

Source: MarkTechPost. Kosmos, built by Edison Scientific, is an autonomous discovery system that runs long research campaigns on...

Nested Learning: A New Machine Learning Approach for Continual Learning that Views Models as Nested Optimization Problems to Enhance Long Context Processing

Source: MarkTechPost. How can we build AI systems that keep learning new information over time without forgetting what...